Abstract

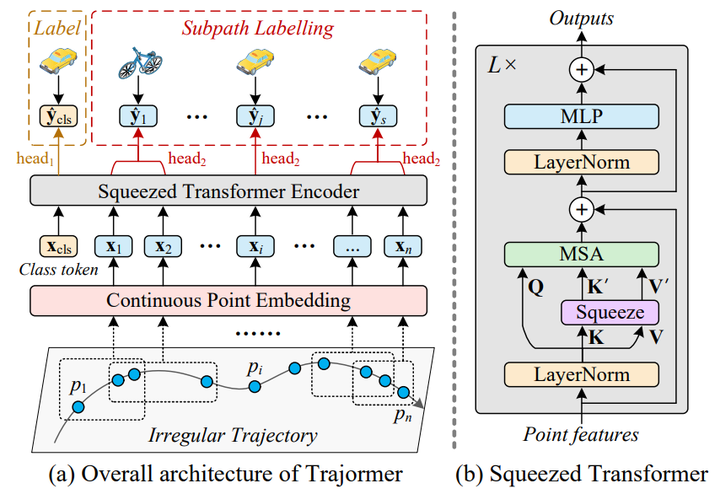

Transformers have become an efficient alternative to recurrent neural networks in many sequential learning tasks. When adapting transformers to trajectory modeling, however, we encounter two major issues. First, because they were originally designed for language modeling, transformers assume regular intervals between input tokens, which conflicts with the irregular sampling of trajectories. Second, transformers often incur high computational costs, especially for long trajectories. In this paper, we address these challenges with a novel transformer architecture called TrajFormer. Our model first generates continuous point embeddings by jointly considering the input features and the spatio-temporal intervals between points, and then adopts a squeeze function to speed up representation learning. Moreover, we introduce an auxiliary loss that eases the training of transformers by exploiting the supervision signals provided by all output tokens. Extensive experiments verify that TrajFormer achieves a better speed-accuracy trade-off than existing approaches.
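To make the idea of supervising all output tokens concrete, the following is a minimal sketch and not the paper's implementation. The abstract does not specify the form of the auxiliary loss, so everything here is an assumption made for illustration: the task is taken to be classification, the main loss is computed on mean-pooled token logits, the auxiliary term is the average per-token cross-entropy against the same trajectory-level label, and `total_loss` and `aux_weight` are hypothetical names.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy of one logit vector against an integer class label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def total_loss(token_logits, label, aux_weight=0.5):
    """Return (total, main, aux) for one trajectory.

    token_logits: list of per-token logit vectors, one per output token.
    label: integer class label shared by the whole trajectory.
    aux_weight: hypothetical weight on the auxiliary term (an assumption).
    """
    n_tokens = len(token_logits)
    n_classes = len(token_logits[0])

    # Main loss (assumed form): cross-entropy on the mean-pooled logits.
    pooled = [sum(t[c] for t in token_logits) / n_tokens for c in range(n_classes)]
    main = cross_entropy(pooled, label)

    # Auxiliary loss (assumed form): every output token is supervised
    # with the same trajectory-level label, then averaged.
    aux = sum(cross_entropy(t, label) for t in token_logits) / n_tokens

    return main + aux_weight * aux, main, aux
```

Under these assumptions, every token position receives a direct gradient signal instead of only the pooled representation, which is one plausible reading of how such a loss could ease training.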